With the arrival of the big data era, the demand for information from the web has grown rapidly. Many companies collect external data from the Internet for various purposes: analyzing competitors, summarizing news stories, tracking trends in specific markets, or collecting daily stock prices to build predictive models. As a result, web crawlers are becoming increasingly important. A web crawler automatically browses the Internet and extracts information according to specified rules.

Classification of web crawlers

Based on their implementation technology and structure, web crawlers can be divided into four types: general web crawlers, focused web crawlers, incremental web crawlers, and deep web crawlers.

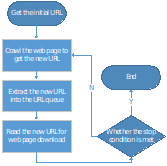

The basic workflow of web crawlers

The basic workflow of general web crawlers

The basic workflow of a general web crawler is as follows:

1. Get the initial URL. The initial URL is the crawler's entry point: it points to the first web page to be crawled.

2. Crawl the page: fetch its HTML content, then parse it to get the URLs of all the pages it links to.

3. Put these URLs into a queue.

4. Take the URLs from the queue one at a time; for each URL, crawl the corresponding web page and repeat the process above.

5. Check whether the stop condition is met. If no stop condition is set, the crawler keeps crawling until it can find no new URLs.
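The workflow above can be sketched in Python as a breadth-first crawl. This is a minimal illustration, not the crawler built later in this article: to stay self-contained and runnable offline, it uses the standard library's `html.parser` instead of BeautifulSoup, takes a hypothetical `fetch` function (URL in, HTML string or `None` out) instead of making real HTTP requests, and uses an assumed `max_pages` limit as its stop condition.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkParser(HTMLParser):
    """Collects the href value of every <a> tag it sees."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def extract_links(base_url, html):
    """Parse a page and return its links as absolute URLs."""
    parser = LinkParser()
    parser.feed(html)
    parser.close()
    return [urljoin(base_url, href) for href in parser.links]


def crawl(seed_url, fetch, max_pages=10):
    """Breadth-first crawl starting from seed_url.

    fetch(url) must return the page's HTML as a string, or None on failure.
    Stops when the queue is empty or max_pages pages have been crawled.
    """
    queue = deque([seed_url])  # URLs waiting to be crawled
    seen = {seed_url}          # prevents queueing the same URL twice
    visited = []
    while queue and len(visited) < max_pages:  # stop condition
        url = queue.popleft()                  # next URL, in FIFO order
        html = fetch(url)
        if html is None:
            continue
        visited.append(url)
        for link in extract_links(url, html):  # parse out linked pages
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


# Usage with a tiny in-memory "web" (hypothetical pages)
pages = {
    "http://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "http://example.com/a": '<a href="/b">B</a> <a href="/c">C</a>',
    "http://example.com/b": "",
    "http://example.com/c": '<a href="/">home</a>',
}
print(crawl("http://example.com/", pages.get))
# ['http://example.com/', 'http://example.com/a', 'http://example.com/b', 'http://example.com/c']
```

In a real crawler, `fetch` would wrap an HTTP request; the `seen` set is what keeps pages that link back to each other from being crawled in an endless loop.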

Preparing the environment for web crawling

Make sure that a browser such as Chrome, IE, or another has been installed.

Download and install Python.

Download a suitable IDE. This article uses Visual Studio Code.

Install the required Python packages

pip is Python's package management tool. It can download, install, and uninstall Python packages, and it is included with the official Python installer, so we can use `pip install` directly to install the libraries we need:

```shell
pip install beautifulsoup4
pip install requests
pip install lxml
```

• BeautifulSoup is a library for easily parsing HTML and XML data.

• lxml is a fast library for parsing XML and HTML; BeautifulSoup can use it as its underlying parser.

• requests is a library for sending HTTP requests (such as GET and POST). We will mainly use it to download the source code of a given web page.

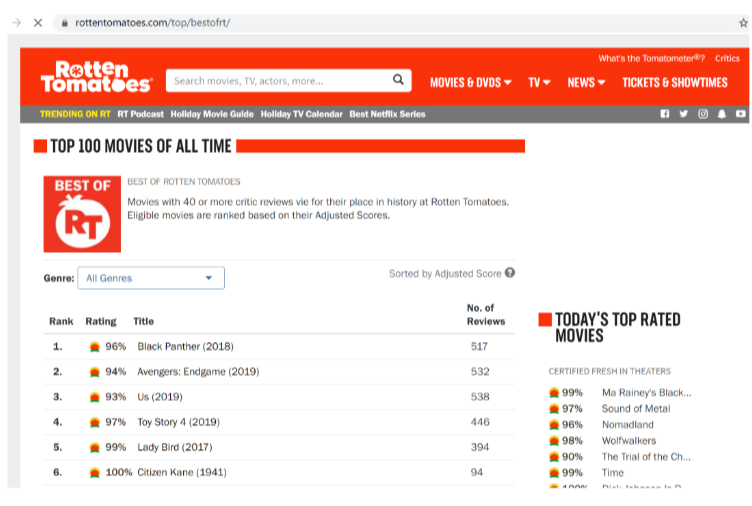

The following example uses a crawler to collect the names and introductions of the top 100 movies on Rotten Tomatoes.

Top 100 Movies of All Time - Rotten Tomatoes

We need to extract each movie's name and ranking from this page, then follow each movie's link to get its introduction.

1. Import the required libraries.

```python
import requests
import lxml  # not used directly; BeautifulSoup only needs lxml to be installed
from bs4 import BeautifulSoup
```

2. Create and request the URL

Define the URL to crawl, then send a request and wait for the response.

```python
url = "https://www.rottentomatoes.com/top/bestofrt/"
f = requests.get(url)
```

When requesting a web page, you may sometimes get a 403 (Forbidden) error, which means the server has refused the request. Many websites do this as an anti-crawler measure to prevent the malicious collection of information. In that case, you can often gain access by sending header information that imitates a browser, in particular the `User-Agent` header.

```python
url = "https://www.rottentomatoes.com/top/bestofrt/"
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36 QIHU 360SE'
}
f = requests.get(url, headers=headers)
```
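When a crawler sends many requests, a `requests.Session` can attach the browser-like header to every request and reuse the underlying connection. The sketch below assumes that approach; `make_session` and `fetch_html` are hypothetical helper names, the 10-second timeout is an arbitrary choice, and a browser-like User-Agent is not guaranteed to get past every site's anti-crawler checks. `raise_for_status()` turns a 403 into a `requests.HTTPError` instead of letting the crawler silently parse an error page.

```python
import requests

# Browser-like User-Agent copied from the example above
BROWSER_UA = ('Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 '
              '(KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36 QIHU 360SE')


def make_session(user_agent=BROWSER_UA):
    """Return a Session that sends the given User-Agent with every request."""
    session = requests.Session()
    session.headers.update({'User-Agent': user_agent})
    return session


def fetch_html(session, url, timeout=10):
    """GET url and return the raw body; raises requests.HTTPError on 4xx/5xx such as 403."""
    response = session.get(url, timeout=timeout)
    response.raise_for_status()
    return response.content
```

With these helpers, `fetch_html(make_session(), url)` can stand in for the bare `requests.get` call, and its result can be passed straight to BeautifulSoup.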

3. Parse the web page

Create a BeautifulSoup object and specify lxml as the parser:

```python
soup = BeautifulSoup(f.content, 'lxml')
```

4. Extract information

The BeautifulSoup library has three methods to find elements:

• find_all(): finds all matching nodes

• find(): finds the first matching node

• select(): finds nodes that match a CSS selector
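The three methods can be compared on a small piece of HTML. The markup below is a hypothetical stand-in shaped like the ranking table used later (a `<table class="table">` containing `<a>` links). It uses Python's built-in `html.parser` so the example runs without lxml; passing `'lxml'` instead works the same way when lxml is installed.

```python
from bs4 import BeautifulSoup

# Hypothetical markup shaped like the ranking table
html = """
<table class="table">
  <tr><td>1.</td><td><a href="/m/first_movie">First Movie (2001)</a></td></tr>
  <tr><td>2.</td><td><a href="/m/second_movie">Second Movie (2002)</a></td></tr>
</table>
"""

soup = BeautifulSoup(html, 'html.parser')

table = soup.find('table', {'class': 'table'})  # find(): first matching node
links = table.find_all('a')                     # find_all(): every <a> in the table
same_links = soup.select('table.table a')       # select(): same nodes via a CSS selector

for link in links:
    print(link['href'], link.get_text(strip=True))
# /m/first_movie First Movie (2001)
# /m/second_movie Second Movie (2002)
```

`find()` and `find_all()` take a tag name plus an attribute dictionary, while `select()` expresses the same query as a single CSS selector string; which one to use is mostly a matter of taste.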

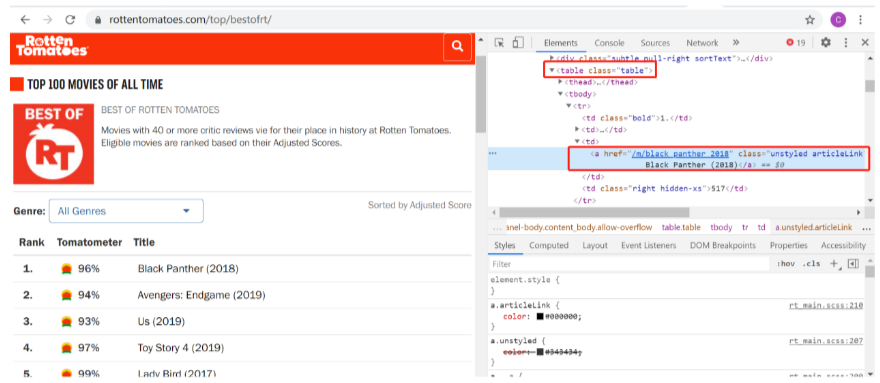

We need the name and link of each of the top 100 movies. Each movie name is the text of an `<a>` tag inside the `<table class="table">` element. After parsing the page with BeautifulSoup, we can use `find()` to locate the table and `find_all()` to collect its links:

```python
movies = soup.find('table', {'class': 'table'}).find_all('a')
```

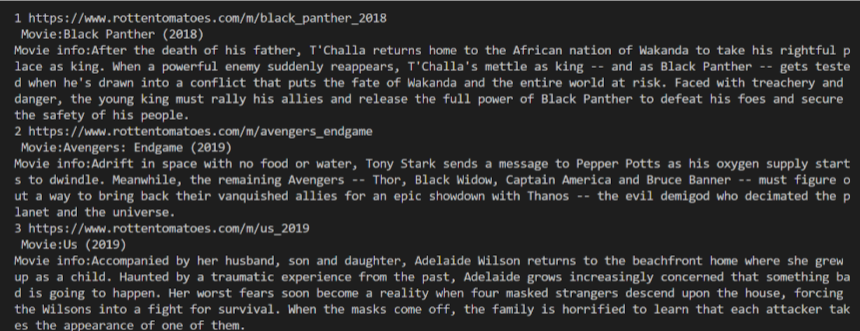

Get the introduction of each movie

Next, we need the introduction of each movie. The introduction is on each movie's own page, so the crawler has to follow each movie's link, request that page, and extract the introduction from it.

The code is:

```python
import requests
import lxml  # not used directly; BeautifulSoup only needs lxml to be installed
from bs4 import BeautifulSoup

url = "https://www.rottentomatoes.com/top/bestofrt/"
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36 QIHU 360SE'
}
f = requests.get(url, headers=headers)

movies_lst = []
soup = BeautifulSoup(f.content, 'lxml')
# Each movie link is an <a> tag inside <table class="table">
movies = soup.find('table', {'class': 'table'}).find_all('a')

num = 0
for anchor in movies:
    # The href is relative, so prepend the site's domain
    urls = 'https://www.rottentomatoes.com' + anchor['href']
    movies_lst.append(urls)
    num += 1

    # Follow the link and parse the movie's own page
    movie_url = urls
    movie_f = requests.get(movie_url, headers=headers)
    movie_soup = BeautifulSoup(movie_f.content, 'lxml')
    movie_content = movie_soup.find('div', {
        'class': 'movie_synopsis clamp clamp-6 js-clamp'
    })

    name = anchor.get_text(strip=True)
    # find() returns None if the page has no synopsis div
    intro = movie_content.get_text(strip=True) if movie_content else ''
    print(num, urls, '\n', 'Movie:' + name)
    print('Movie info:' + intro)
```

The output is:

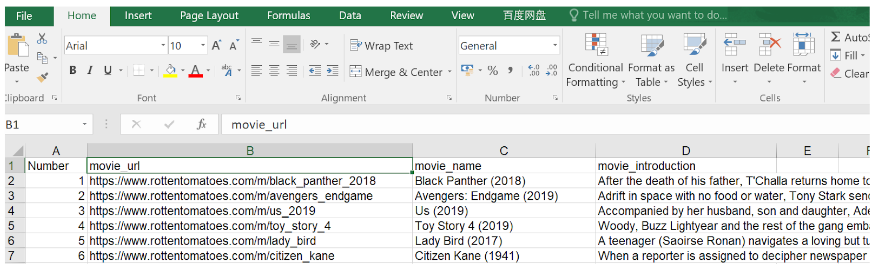
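The per-movie step in the loop above can also be pulled out into small functions, which makes it easier to test against saved HTML. Here `urllib.parse.urljoin` resolves the relative `href` against the site address, which also copes with hrefs that are already absolute. This is a sketch: `movie_page_url` and `parse_synopsis` are hypothetical names, the class string is copied from the code above and reflects the page layout when this article was written, and the HTML in the usage lines is made up.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

SITE = "https://www.rottentomatoes.com"
# Class string copied from the crawler above; the live page may have changed since
SYNOPSIS_CLASS = 'movie_synopsis clamp clamp-6 js-clamp'


def movie_page_url(href, base=SITE):
    """Turn a (usually relative) href from the ranking table into an absolute URL."""
    return urljoin(base, href)


def parse_synopsis(html):
    """Return the synopsis text from a movie page, or None if the div is missing."""
    soup = BeautifulSoup(html, 'html.parser')
    div = soup.find('div', {'class': SYNOPSIS_CLASS})
    return div.get_text(strip=True) if div is not None else None


# Usage with made-up markup
page = '<div class="movie_synopsis clamp clamp-6 js-clamp">  A made-up plot.  </div>'
print(movie_page_url('/m/example_movie'))  # https://www.rottentomatoes.com/m/example_movie
print(parse_synopsis(page))                # A made-up plot.
```

Returning `None` for a missing div lets the calling loop decide whether to skip the movie or write an empty cell, instead of crashing with an `AttributeError`.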

Write the crawled data to Excel

To make the data easier to analyze, we can write it to an Excel file. Here we use the xlwt library (install it with `pip install xlwt`), which writes files in the legacy .xls format.

Import the xlwt library.

```python
from xlwt import Workbook
```

Create an empty workbook with one sheet.

```python
workbook = Workbook(encoding='utf-8')
table = workbook.add_sheet('data')
```

Write the column headers in the first row (row 0).

```python
table.write(0, 0, 'Number')
table.write(0, 1, 'movie_url')
table.write(0, 2, 'movie_name')
table.write(0, 3, 'movie_introduction')
```

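Inside the loop, write each movie's data into its own row, starting from the second row (row 1).

```python
# Inside the for loop; set line = 1 before the loop starts
table.write(line, 0, num)
table.write(line, 1, urls)
table.write(line, 2, anchor.get_text(strip=True))
table.write(line, 3, movie_content.get_text(strip=True))
line += 1
```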

Finally, save the workbook:

```python
workbook.save('movies_top100.xls')
```
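If you prefer to keep crawling and saving separate, you can collect each movie as a tuple inside the loop and write everything once at the end. The sketch below uses the same xlwt calls shown above; `write_xls` is a hypothetical helper name and the sample row is made up.

```python
from xlwt import Workbook


def write_xls(path, header, rows):
    """Write a header row followed by data rows to a one-sheet .xls file."""
    workbook = Workbook(encoding='utf-8')
    sheet = workbook.add_sheet('data')
    for col, title in enumerate(header):
        sheet.write(0, col, title)
    # Data rows start at row 1, right below the header
    for row_idx, row in enumerate(rows, start=1):
        for col, value in enumerate(row):
            sheet.write(row_idx, col, value)
    workbook.save(path)
    return len(rows)


# Usage: inside the crawl loop, append (num, urls, name, intro) to a list, then:
header = ['Number', 'movie_url', 'movie_name', 'movie_introduction']
rows = [(1, 'https://example.com/m/sample', 'Sample Movie', 'A made-up introduction.')]
```

Calling `write_xls('movies_top100.xls', header, rows)` after the loop produces the same layout as the row-by-row version, and a crash halfway through the crawl no longer leaves a half-written workbook behind.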

The final code is:

```python
import requests
import lxml  # not used directly; BeautifulSoup only needs lxml to be installed
from bs4 import BeautifulSoup
from xlwt import Workbook

# Create the workbook and write the column headers in row 0
workbook = Workbook(encoding='utf-8')
table = workbook.add_sheet('data')
table.write(0, 0, 'Number')
table.write(0, 1, 'movie_url')
table.write(0, 2, 'movie_name')
table.write(0, 3, 'movie_introduction')
line = 1  # movie data starts in the second row

url = "https://www.rottentomatoes.com/top/bestofrt/"
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36 QIHU 360SE'
}
f = requests.get(url, headers=headers)

movies_lst = []
soup = BeautifulSoup(f.content, 'lxml')
movies = soup.find('table', {'class': 'table'}).find_all('a')

num = 0
for anchor in movies:
    urls = 'https://www.rottentomatoes.com' + anchor['href']
    movies_lst.append(urls)
    num += 1

    # Follow the link and parse the movie's own page
    movie_url = urls
    movie_f = requests.get(movie_url, headers=headers)
    movie_soup = BeautifulSoup(movie_f.content, 'lxml')
    movie_content = movie_soup.find('div', {
        'class': 'movie_synopsis clamp clamp-6 js-clamp'
    })

    name = anchor.get_text(strip=True)
    # find() returns None if the page has no synopsis div
    intro = movie_content.get_text(strip=True) if movie_content else ''
    print(num, urls, '\n', 'Movie:' + name)
    print('Movie info:' + intro)

    # One row per movie
    table.write(line, 0, num)
    table.write(line, 1, urls)
    table.write(line, 2, name)
    table.write(line, 3, intro)
    line += 1

workbook.save('movies_top100.xls')
```

The result is:
